Half the AI Agent Market Is One Category. The Rest Is Wide Open.

Anthropic’s new data shows software engineering dominates agentic AI. For founders, that’s not a warning. It’s a treasure map.

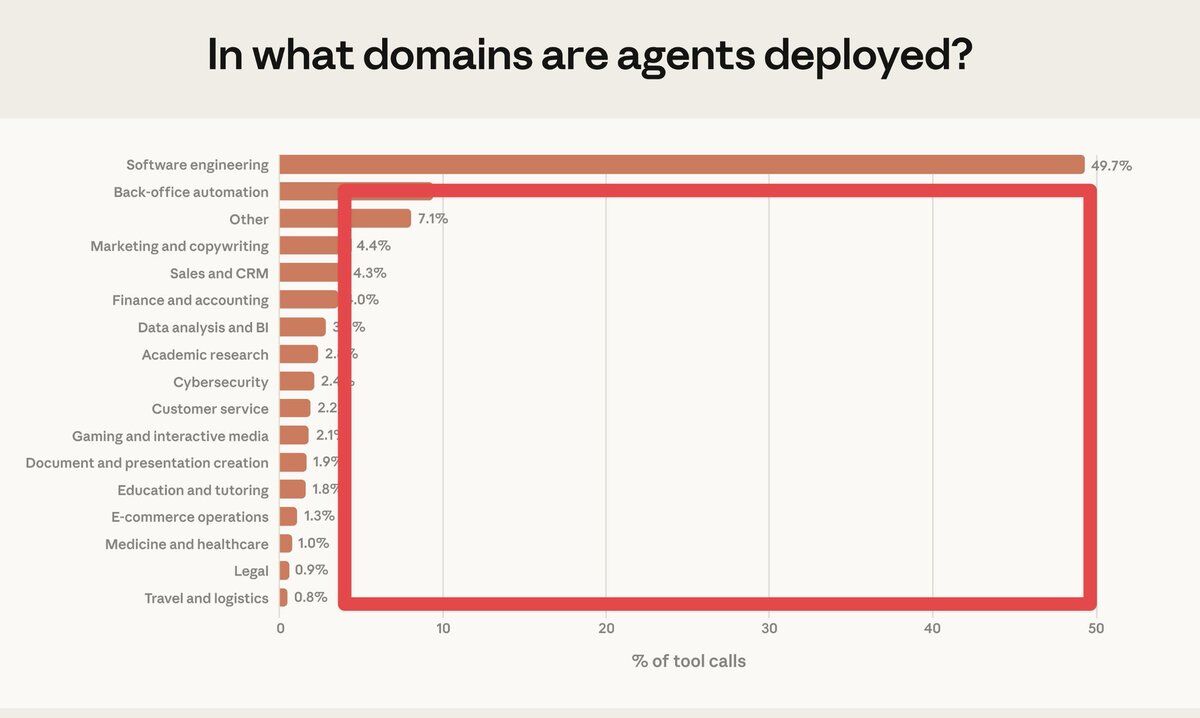

Anthropic's data showing software engineering commanding nearly half of all AI agent tool calls — while healthcare, legal, and a dozen other verticals each claim under 5% — is what Han Wang calls the greenfield opportunity most founders are overlooking.

Source: x.com

TL;DR

Software engineering accounts for nearly 50% of all AI agent tool calls. Healthcare, legal, finance, and a dozen other verticals are barely touched, each under 5%. That’s 300 vertical AI unicorns waiting to be built.

If I were starting a company today, I’d stare at the red rectangular area of the bar chart above until I saw my future.

Archived tweet: This chart is a good reminder of how much opportunity there is in AI agents right now. There will be plenty of horizontal opportunities for agents, but equally many workflows that need deep domain expertise to actually make the user successful at automating the unique processes in their vertical. The template is to build agentic software that taps into proprietary data, handles the workflow in a way that bridges the user and the agent collaboration effectively, and has a deep domain-specific context engineering, and the ability to drive change management for customers. There still are huge openings in many categories. [Quoting @handotdev]: what I would be working on if I started another company today https://t.co/kKDFxcbtZv https://t.co/7dpDyiHAW6

Aaron Levie @levie February 21, 2026

Software engineering owns half of all AI agent activity. The other half is scattered across 16 verticals, none above 9%. Healthcare is 1%. Legal is 0.9%. Education is 1.8%. These aren’t saturated markets. They’re markets that barely exist.

Anthropic just published the most comprehensive study of how AI agents actually work in the wild. The headline: software engineering accounts for 49.7% of agentic tool calls on their API. The buried lede: everything else is greenfield.

The Deployment Overhang

Here’s what should make founders salivate: the models are already more capable than users trust them to be.

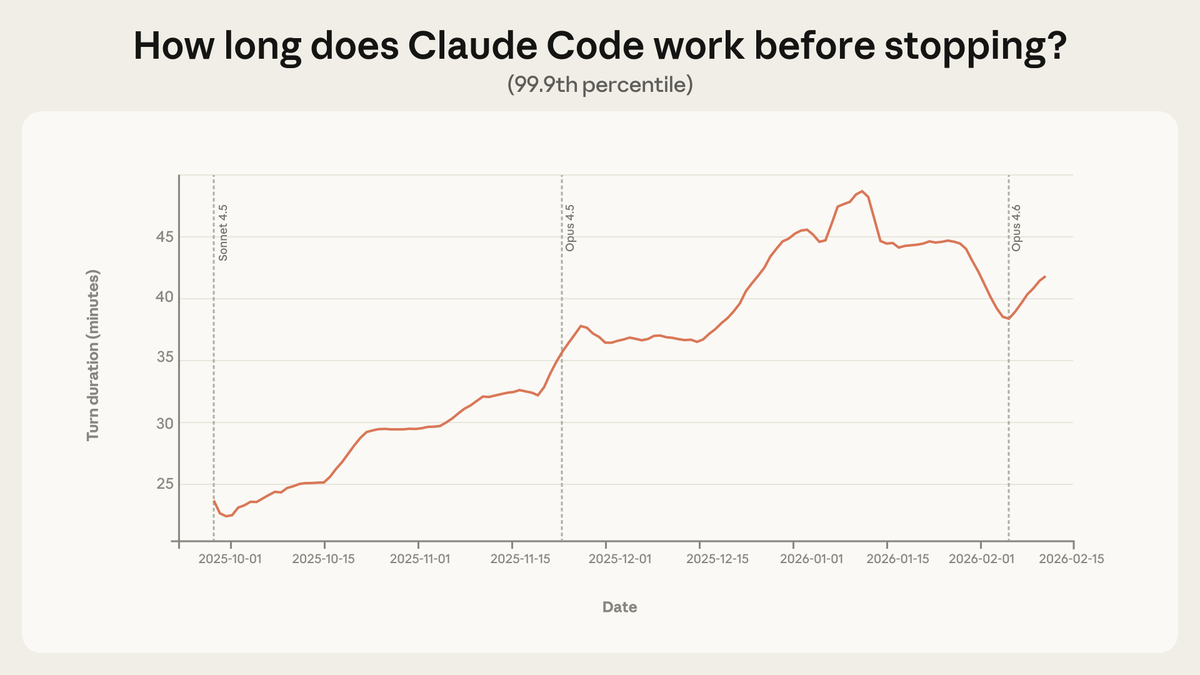

METR’s capability assessments show Claude can solve tasks that would take a human nearly five hours. But in practice, the 99.9th percentile session runs only about 42 minutes. That gap, between what AI can do and what we let it do, is a massive opportunity.

Between October 2025 and January 2026, the 99.9th percentile turn duration nearly doubled, from under 25 minutes to over 45 minutes. The growth is smooth across model releases. This isn’t just better models. It’s users extending trust, session by session, as they learn to work alongside agents.
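To put rough numbers on that gap, here is a back-of-the-envelope sketch. The inputs are the rounded figures quoted above ("nearly five hours," "about 42 minutes," "under 25" and "over 45" minutes), so treat the outputs as approximations rather than anything Anthropic or METR published; the constant and function names are made up for illustration.

```python
# Rounded figures quoted in this article; approximations only.
CAPABILITY_MINUTES = 5 * 60   # "nearly five hours" of human-equivalent work
OBSERVED_MINUTES = 42         # 99.9th percentile session, "about 42 minutes"
TURN_OCT_2025 = 25            # "under 25 minutes"
TURN_JAN_2026 = 45            # "over 45 minutes"


def ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, rounded to one decimal place."""
    return round(numerator / denominator, 1)


overhang = ratio(CAPABILITY_MINUTES, OBSERVED_MINUTES)
growth = ratio(TURN_JAN_2026, TURN_OCT_2025)

print(f"Capability vs. observed use: ~{overhang}x")
print(f"Growth in longest turns, Oct 2025 to Jan 2026: ~{growth}x")
```

On these rounded inputs, the models can handle roughly seven times more than the longest sessions ask of them, while the longest turns grew a bit under 2x in three months.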

The capability is there. The deployment isn’t. That’s not a problem. That’s a product opportunity.

How Trust Actually Evolves

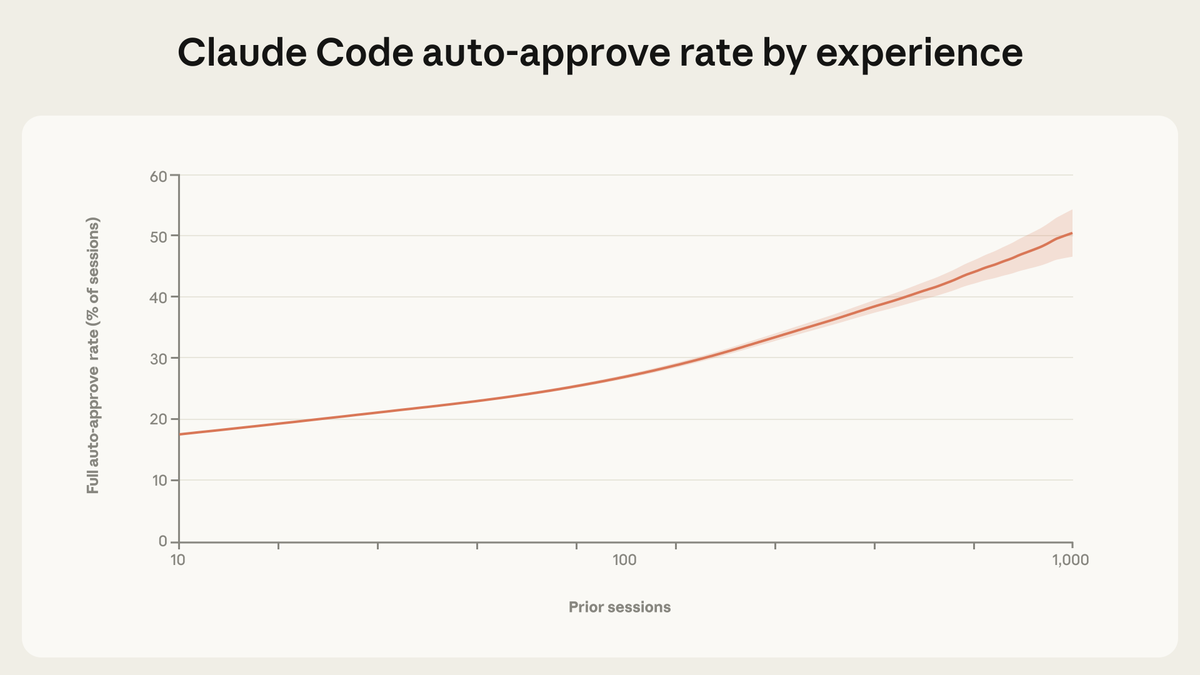

New users run about 20% of their Claude Code sessions on full auto-approve. By 750 sessions, over 40% run on full auto-approve. But here’s the counterintuitive finding: experienced users also interrupt MORE, not less. New users interrupt 5% of turns. Veterans interrupt 9%.

This isn’t a contradiction. It’s a shift in oversight strategy. Beginners approve each step before it happens. Veterans delegate and intervene when something goes wrong. They’ve moved from pre-approval to active monitoring.
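The difference between the two oversight styles is easier to see in a toy sketch. Everything here is hypothetical: the function names, the step list, and the "risky" rule are invented for illustration and are not how Claude Code implements approvals.

```python
from typing import Callable, List


def run_with_preapproval(steps: List[str], approve: Callable[[str], bool]) -> List[str]:
    """Beginner style: ask before every step; stop at the first rejection."""
    done = []
    for step in steps:
        if not approve(step):
            break
        done.append(step)
    return done


def run_with_monitoring(steps: List[str], looks_wrong: Callable[[str], bool]) -> List[str]:
    """Veteran style: steps run unprompted; the human interrupts after a bad one."""
    done = []
    for step in steps:
        done.append(step)       # the step executes without asking first
        if looks_wrong(step):
            break               # the human steps in once something looks off
    return done


def risky(step: str) -> bool:
    """Hypothetical rule: treat any delete step as the one a human would flag."""
    return step.startswith("delete")


steps = ["read files", "edit config", "delete branch", "run tests"]

preapproved = run_with_preapproval(steps, approve=lambda s: not risky(s))
monitored = run_with_monitoring(steps, looks_wrong=risky)

print(preapproved)  # ['read files', 'edit config']
print(monitored)    # ['read files', 'edit config', 'delete branch']
```

The trade-off shows up in the output: monitoring asks no questions along the way, but the questionable step has already run by the time the human intervenes.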

And here’s the safety finding that matters: on complex tasks, Claude Code asks for clarification more than twice as often as humans interrupt it. The agent is pausing to check, not barreling ahead. That’s a feature, not a bug.

Levie’s Vertical AI Playbook

Aaron Levie’s template has four parts. Build agentic software that taps into proprietary data. Design the workflow so the user and the agent actually collaborate. Invest in deep, domain-specific context engineering. And, the part most founders miss: drive change management for customers.

That last piece is why vertical AI is so defensible. Anyone can build a wrapper. Few can navigate the specific workflows, regulatory constraints, and organizational friction of healthcare billing or legal discovery or construction permitting.

SaaS has grown 10x per decade for a few decades now. Over 40% of VC dollars in the past 20 years went to SaaS companies. We produced 300+ SaaS unicorns. The thesis is simple: every one of those unicorns has a vertical AI equivalent waiting. And the AI versions could be 10x larger, because they don’t just replace software, they replace the operators too.

The Co-Construction Insight

Anthropic’s core finding deserves attention from anyone writing AI policy. Autonomy isn’t a property of the model. It’s co-constructed by the model, the user, and the product. Pre-deployment evaluations can’t capture this. You have to measure in the wild.

Archived tweet: Software engineering makes up ~50% of agentic tool calls on our API, but we see emerging use in other industries. As the frontier of risk and autonomy expands, post-deployment monitoring becomes essential. We encourage other model developers to extend this research. https://t.co/p8pOjgJPrh

Anthropic @AnthropicAI February 18, 2026

The numbers are reassuring on safety: 73% of tool calls have a human in the loop. Only 0.8% of actions are irreversible. The riskiest deployments, things like API key exfiltration or autonomous crypto trading, are mostly security evaluations, not live production.

Policy that mandates “approve every action” will kill the productivity gains without adding safety. The better target is ensuring humans can monitor and intervene, not mandating specific approval workflows.

Where the Unicorns Are Hiding

The map is drawn. Software engineering is spoken for. Healthcare, legal, finance, education, customer service, and logistics are among the 16 verticals, each with a single-digit share of agent activity, waiting for someone to build the domain expertise into the agent.

300 SaaS unicorns came before. 300 vertical AI unicorns are coming next. The founders who pick a vertical, build domain expertise into their agents, and figure out change management will own the next decade of enterprise software.

The models can already work for nearly five hours. Users only let them work for 42 minutes. That gap says we are still early: there is far more to build, much of it in industries that have barely seen a minute of intelligence in action.

Related Links

- Measuring AI agent autonomy in practice (Anthropic)
- Garry Tan on vertical AI as the next 10x (@garrytan)
- Han Wang on what to build today (@handotdev)
